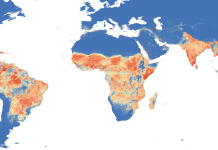

The BYTE project researched the positive and negative societal impacts associated with big data. As part of this research it considered how big data affects privacy and how data protection law affects big data practices. The results of this research can also be applied more specifically to text and data mining (TDM), as big data is an umbrella term covering a range of technologies like cloud computing and TDM. Here I highlight some observations concerning data protection and TDM.

Data protection is said to hamper text and data mining. This should not come as a surprise: data protection law hampers any processing of personal data insofar as it regulates such processing. The basic principle of EU data protection law is that processing of personal data is not free: it requires a legal ground and is only allowed when it complies with the principles of data protection law. Assessing the tension between TDM and data protection leads to the following questions:

- Which data protection principles or rules cause the main obstacles, and how can TDM practices be made compliant with data protection law?

- Do some of these rules cause excessive obstacles for TDM which can be avoided by using other legal protection mechanisms?

In this text, I will touch upon both questions. We will see that TDM is in general possible within the data protection framework, but its use is limited and regulated just like other processing operations involving personal data. However, protecting the data subject through consent requirements poses problems, as consent is a protection mechanism that is difficult to scale. It is therefore advisable to enable, and switch to, aggregated protection mechanisms. Privacy by design and the development of standardized, privacy-friendly TDM practices can be a way forward.

TDM and purpose limitation

The main obstacle raised by data protection law is the purpose limitation principle. Sometimes this is presented as making TDM impossible, as data mining aims to discover unknown relations and thrives on combining datasets, which were often collected for other purposes. I think this view exaggerates the problem. We are not confronted with an unsolvable contradiction between data mining and the purpose limitation principle. The fact that data mining does not test a prior hypothesis, as traditional statistics does, but may uncover unknown relations does not mean it is done at random. In general it is processing done with a purpose that can be explicitly formulated. The question of whether a dataset can be used for a new purpose that was not foreseen at the time of collection can also arise when using traditional statistical methods. But with the development of cheap, large-scale computing power and storage, the long-term storage and reuse of data have become more accessible and useful. The really new element is that datasets are no longer isolated silos, but can be linked and recombined for several purposes much more easily. Regulating such data flows is exactly what data protection aims at.

Article 5(1)(b) GDPR states that personal data shall be “collected for specified, explicit and legitimate purposes and not further processed in a manner that is incompatible with those purposes”. This principle has two building blocks. The first is purpose specification: personal data may only be collected for specified, explicit and legitimate purposes. The second is that further use or processing is only allowed when it is not incompatible with the original purposes.

It is important to note that further use for other purposes is not forbidden as such, but only when the purposes of this further use are incompatible with the original purposes. The GDPR does not require the same or a similar purpose, but rather prohibits incompatible purposes. This limits further processing of personal data, but also leaves room for processing with altered and even new purposes. The compatibility of the original purpose and the purpose of the further processing has to be assessed. In some cases this can lead to the conclusion that even a change of purpose is permissible.

This compatibility assessment allows a risk-based application of data protection. It makes clear that further use of personal data for TDM is possible, but the purpose of the data mining and the safeguards that limit the further use and transfer of the data will be decisive.

The need for scalable, aggregated control mechanisms

However, the current data protection framework has other problematic aspects. It applies an individual control model to personal data, one that is still very much built around individual transactions. Control mechanisms based on individual transactions do not scale well to a larger number of interactions and lead to high transaction costs. They either become dysfunctional or present barriers to big data practices, and to TDM in particular. This situation is not specific to data protection. We can see similar problems in the copyright framework, where each use of a protected work has to be authorized by the right holder. However, as these problems with copyright and its ancillary rights arose long before the Internet, aggregated mechanisms have been developed through licensing schemes and collective rights management (although these have not adapted very well to an Internet or data economy either).

Similarly, these control mechanisms in the data protection framework need to be replaced by collective or aggregate mechanisms of control and decision-making. This implies a shift from an individual control model, where the individual decides about each interaction, to a liability model, in which decision-making during operations happens more collectively or in aggregate, and the active role of the individual is reduced to receiving compensation or obtaining a claim against the data controller.

An example is consent as a ground of legitimation. Consent has been criticized as no longer effective as a protective mechanism. Often it is a nuisance not only for the data controller but also for the data subject. A typical example is the consent requirement for cookies, which is generally clicked away as an annoying hurdle to accessing a website. Further, data controllers can easily shift their responsibility by presenting long privacy notices that contain an agreement to a very broad and vague range of further uses and that act as liability disclaimers. Data subjects are often not in a position to read or even understand such notices. Data controllers also use broadly formulated consent to avoid having to return to the data subject too often for renewed consent, which again undermines the effectiveness of consent as a safeguard. In the context of data mining, the need to contact a large number of data subjects to obtain consent can be a disproportionate cost and can effectively inhibit certain data mining practices, even when they can be beneficial for the data subject (e.g. in health research). Therefore consent would best be limited to situations which can seriously affect the data subject, and in general be replaced by collective or aggregated safeguards.

Control by regulators can be such a collective alternative to consent. Data protection can become more similar to regimes of product safety and liability, like the authorization of drugs and chemicals. This also implies strict regulation and standardization of risk assessments.

A specific aggregate solution can be found in privacy by design. Privacy by design aims to ensure that privacy concerns are addressed as early as the design phase. This also implies that aggregated risk and legal assessments of the processing are, to a large extent, made in this design phase. During the actual processing of personal data, decision-making can remain limited, which reduces transaction costs. Standardization is then a further collective process that reduces transaction costs by creating well-understood common solutions. The information processing involved in individual decision-making is reduced to choosing a solution from a set of well-understood options.

The challenge for the TDM community is therefore to develop privacy-friendly TDM practices. This can concern technical measures, like data mining on encrypted data, and can build further on statistical disclosure control techniques. But it can also concern organizational measures (e.g. clear role descriptions with access rights) and legal measures (e.g. confidentiality and use limitation clauses) that limit access, transfer and use. TDM practices can be standardized, similar to what is done with clinical trials, but now aimed at personal data protection.
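To make the idea of a technical measure a little more concrete, here is a minimal sketch of one basic statistical disclosure control technique, small-cell suppression: counts for groups with fewer than k members are withheld before mining results are released. The function name `suppress_small_cells`, the field names and the threshold are all hypothetical choices for illustration; a real TDM pipeline would need a proper risk assessment rather than this simplified check.

```python
from collections import Counter

def suppress_small_cells(records, quasi_identifiers, k=5):
    """Count records per combination of quasi-identifiers and
    replace any count below k with None (i.e. suppress it)."""
    counts = Counter(
        tuple(record[q] for q in quasi_identifiers) for record in records
    )
    return {group: (n if n >= k else None) for group, n in counts.items()}

# Hypothetical mined records: each row links a text-mined term to a person's
# coarse attributes. All values are invented for illustration only.
records = [
    {"age_band": "30-39", "region": "North", "term": "asthma"},
    {"age_band": "30-39", "region": "North", "term": "asthma"},
    {"age_band": "30-39", "region": "North", "term": "diabetes"},
    {"age_band": "40-49", "region": "South", "term": "asthma"},
]

# With k=3, the single-person group is too small to publish and is suppressed.
table = suppress_small_cells(records, ["age_band", "region"], k=3)
for group, count in table.items():
    print(group, "suppressed" if count is None else count)
```

The design choice here is that the decision about what may be released is made once, when the threshold is fixed, rather than per data subject, which is the kind of aggregated safeguard discussed above.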

As made clear above, the GDPR provides space for a risk-based implementation. Developing such privacy-friendly TDM practices will make it possible to replace or reduce the consent requirement for further use in a range of use cases.

We can conclude that data protection does indeed present hurdles for TDM. This is not an unsolvable contradiction, however, but rather a tension that needs to be made productive for the further development of TDM practices.

A more detailed analysis of the tensions between big data, including TDM, and data protection and other legal frameworks can be found in the BYTE deliverable D4.2 Evaluating and addressing positive and negative societal externalities.

The BYTE project will be at the Computers, Privacy & Data Protection (CPDP) conference in Brussels. We look forward to an engaging discussion at the conference.

// All blog posts are the personal opinion of the bloggers. For more information see FutureTDM's DISCLAIMER on how we handle the blog. //